Artificial Intelligence: Friend or Foe? It is Up to Us

From remarkably accurate predictive text on our phones to self-navigating robot vacuums, artificial intelligence (AI) is quietly weaving itself into the fabric of our daily lives. Yet amid the buzz and intrigue, a crucial question emerges: Is AI a torch illuminating the path of progress, or a looming shadow threatening our future? Is it all sunshine and rainbows, or should we fear that it is on the path to world domination?

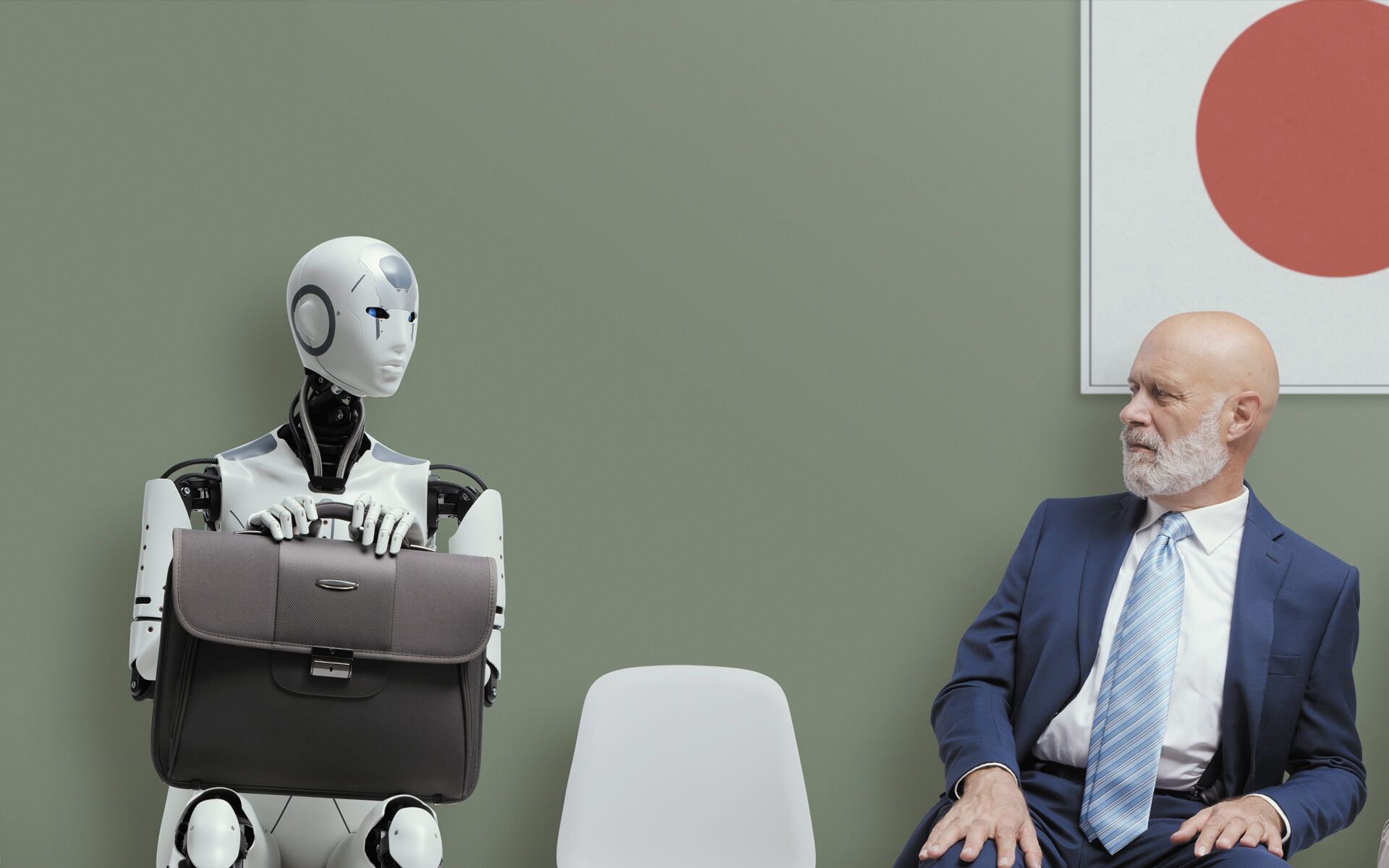

The truth, as is often the case, lies somewhere between these fantastical narratives and dystopian anxieties. Unlike the villains of fiction, AI itself is not inherently dangerous. It is a powerful tool, like any technology, whose impact depends on the hands that wield it. AI can be thought of as a super-intelligent student, constantly learning and evolving. This potential opens up a world of possibilities, from self-driving cars navigating roads with unparalleled safety to AI doctors analyzing medical scans with superhuman accuracy. However, like any student, AI can make mistakes. Some worry that biases could lead to unfair decisions, while others are concerned about jobs being displaced by automated systems. These are valid anxieties, and they highlight the critical need for open discourse and responsible development.

This article is a journey into the fascinating world of AI, seeking not only to uncover its exciting possibilities but also to confront the challenges it presents. By understanding what AI is and what it can do, we can all become active participants in shaping its future and ensure it benefits everyone, not just a select few. Together, we can dispel misconceptions and chart a course toward a responsible and ethical future with AI.

Shaping the future responsibly: ethical concerns

It is natural to have concerns, especially when something sounds as powerful as AI. While the potential of AI and computer technology to revolutionize our world is undeniable, it is crucial to acknowledge the potential pitfalls that accompany this progress. This section delves into the ethical concerns voiced by prominent thinkers and experts, exploring the challenges facing AI and computing and examining solutions for navigating them responsibly.

Weapons of “math destruction”: inequalities

Cathy O'Neil, Ph.D., is the CEO of ORCAA, an algorithmic auditing company, and a member of the Public Interest Tech Lab at the Harvard Kennedy School [1]. Her 2016 book, Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy [2], critically examines the impact of big data algorithms on society, particularly their role in exacerbating existing inequalities in sectors such as insurance, advertising, education, and law enforcement. The book was longlisted for the 2016 National Book Award for Nonfiction, though it did not make the shortlist; it has received widespread acclaim and won the Euler Book Prize [3]. Drawing on her expertise in mathematics, O'Neil shows how these algorithms, despite their neutral appearance, often produce outcomes that disadvantage the economically challenged and racially marginalized, effectively reinforcing systemic biases.

She highlights the detrimental impact of discriminatory algorithms, illustrating how they can deny opportunities to the underprivileged: for example, a poor student may be denied a loan because of the perceived risk associated with their home address. This perpetuates a cycle of poverty and undermines democratic values by favoring the privileged and disadvantaging the less fortunate. O'Neil argues that these algorithms, which she terms Weapons of Math Destruction, are opaque, unregulated, and difficult to contest, and that because they operate at scale, their biases are magnified, affecting larger segments of the population and entrenching discrimination.

Algorithms of oppression: how search engines reinforce racism

Safiya Umoja Noble is a notable figure in this domain. She is the Director of the Center on Race and Digital Justice and Co-Director of the Minderoo Initiative on Tech and Power at the UCLA Center for Critical Internet Inquiry. Dr. Noble is also a board member of the Cyber Civil Rights Initiative, which serves those vulnerable to online harassment, and she provides expertise to a number of civil and human rights organizations [4].

Professor Noble is the author of the best-selling book on racist and sexist algorithmic harm in commercial search engines, Algorithms of Oppression: How Search Engines Reinforce Racism (NYU Press) [5], which has been widely reviewed in scholarly and popular publications [4]. The book examines the impact of search engine algorithms through the lenses of information science, machine learning, and human-computer interaction. Noble's path to this work began when she observed biased search results while studying sociology and, later, while pursuing a master's degree in library and information science. Inspired by the problematic results returned for the query "black girls," the book critiques the racist and sexist biases embedded in search engines. After moving into academia, Noble explored these themes further, culminating in the book's publication in 2018 [6].

For six years, Dr. Noble dove into the world of Google search, uncovering a disturbing truth: search results can be biased! Her book argues that these algorithms mirror and amplify racism in society, harming women of color in particular. Comparing the search results for "Black girls" and "white girls," she shows how the algorithm favors whiteness by linking it with positive terms. This bias leads to harmful consequences such as unfair profiling and economic disadvantage for marginalized groups. A distinctive feature of the book is that it examines race and gender together, emphasizing that different women experience oppression in different ways. The book also suggests that this bias is not limited to search engines but extends to other technologies such as facial recognition.

While many recent technologies claim to be unbiased, Dr. Noble argues they often "repeat the problems they're supposed to solve." Her work reminds us to be critical of the technology we use and fight against the biases it may hold. Only then can we create a truly fair and just digital world.

Automating inequality

Virginia Eubanks is an American political scientist, professor, and author studying technology and social justice. Previously, Eubanks was a Fellow at New America researching digital privacy, economic inequality, and data-based discrimination [7].

Eubanks has written and co-edited multiple award-winning books, the best known being Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor [8]. The book uncovers the harm caused when computer algorithms replace human decision-making and shows how these systems negatively impact the financially disadvantaged. She clearly depicts how automated systems in public services disproportionately affect the poor and working class. Coining the term "digital poorhouse," she criticizes the automation of welfare and other services and calls for a humane approach to technology policy that prioritizes social responsibility.

Coded bias

Coded Bias [9] is a documentary by Shalini Kantayya that premiered at the 2020 Sundance Film Festival. This thought-provoking film explores the fallout of MIT Media Lab researcher Joy Buolamwini's discovery that facial recognition does not see dark-skinned faces accurately, and follows her push for the first-ever legislation in the U.S. to govern against bias in the algorithms that impact us all. Along the way, it features insights from notable researchers on the biases in facial recognition technology and their societal implications. Kantayya's own path, from learning about algorithms through O'Neil's book Weapons of Math Destruction [2] to exploring Buolamwini's research, underscores the critical need for awareness and action against biased AI [10].

The documentary explores the troubling reality of bias embedded within artificial intelligence (AI). It all began with MIT researcher Joy Buolamwini's startling discovery that facial recognition systems could not recognize her face. Driven to understand this failure, she uncovered a deeply concerning truth: these systems were biased, malfunctioning specifically on darker skin tones [9], [10].

Coded Bias goes beyond this unsettling revelation, uncovering the broader impact of AI bias on marginalized communities. From housing discrimination to unfair hiring practices, the film exposes how algorithms can perpetuate inequalities in crucial areas like healthcare, credit scoring, education, and the legal system. The lack of legal frameworks for AI, the documentary argues, allows such human rights violations to occur unchecked [9], [10].

Algorithmic Justice League

Fueled by her findings, Buolamwini did not just raise awareness; she took action. She founded the Algorithmic Justice League (AJL) in 2016 and testified before Congress [11], becoming a powerful voice fighting for responsible AI development and a fairer digital future for all. Through research, art, and advocacy, the AJL is dedicated to challenging biases in AI and fostering a more equitable digital world; in 2021, Fast Company recognized it as one of the most innovative AI organizations in the world [11].

Navigating the ethical landscape of AI

We have all seen the cool AI stuff in movies: robots doing chores, computers reading minds, maybe even flying cars. However, there has been a lot of buzz about the dark side of AI as mentioned above, like biased algorithms making unfair decisions or even robots taking our jobs. So, should we be in love with AI or scared?

The answer is: IT DEPENDS! AI is a powerful tool, like a fancy new hammer. In the wrong hands, it could cause damage. But in the right hands, it can build amazing things. The key is making sure those hands are responsible and ethical.

Where are the gaps?

The sources above paint a clear picture: awareness of AI's potential pitfalls is growing, but the solutions remain in their embryonic stages. Acknowledging the issues is one thing; the real challenge lies in translating that awareness into action. Humanity is still figuring out how to use this hammer responsibly. AI itself is not inherently dangerous; the danger lies in the intent behind its development and deployment. Just as with fire, nuclear energy, and biological research, which hold immense potential for both good and harm, ethical considerations and responsible use are paramount. The emphasis should be on the urgency of action, urging sensible, compassionate, and conscious people to step forward and help shape a beneficial future for humanity. This call resonates deeply with all of us. To ensure AI serves as a force for good, we must actively participate in shaping its development and implementation, starting with the actions outlined here.

Here is where we can step in:

Deeper training of the builders: The teams behind the AI systems are the key to a better future. Equipping developers with cultural sensitivity and fostering diverse teams who understand various cultures and backgrounds are crucial steps towards mitigating unintentional biases. This helps them avoid accidentally building biases into their products, just like learning carpentry helps you avoid hammering your thumb!

Diversity and inclusivity of the data: When AI learns from limited data, it is like a textbook that tells only one side of the story. We need to include different perspectives and continuously update the information, just as we keep the apps on our phones and other devices up to date. Expanding training datasets to encompass various perspectives and regularly incorporating feedback from diverse groups will help ensure inclusivity and avoid perpetuating censorship.

Being transparent and opening the black box: Imagine a magic trick where you cannot see how the illusion works. That is how some AI systems feel. Making these systems more transparent, for example by explaining how they reach their decisions, builds trust and allows for scrutiny, which is critical for addressing potential injustices.

Broadening governance and sharing the power: If only a few privileged entities get to decide the rules of a game, the game is not fair. Unfortunately, we can observe the same unfairness in the rules governing AI. Diverse groups must be involved in making decisions, not just a few powerful companies or countries. Moving away from unilateral control toward multi-stakeholder involvement in regulation will help ensure diverse perspectives are considered and prevent undue influence by specific entities.

Final Words

The discussion around AI ethics will undoubtedly continue to evolve. By actively engaging in these conversations, supporting initiatives that align with our values, and holding developers and regulators accountable, we can work towards a future where AI empowers and uplifts, not divides and harms.

AI is not bad in itself. It is like fire: it can cook your dinner or burn down your house, depending on how you use it. The important thing is to be aware of the risks and work together to make sure AI benefits everyone, not just a privileged few.

So, let’s roll up our sleeves, grab that metaphorical AI hammer, and build a future where this technology works for us, not against us. It is up to all of us to make sure AI becomes a force for good, not a sci-fi nightmare.

Remember, the power lies in collective action. We can definitely build the future we want, together.

References

[1] https://cathyoneil.org

[2] O'Neil, C. (2017). Weapons of Math Destruction. Harlow, England: Penguin Books.

[3] https://en.wikipedia.org/wiki/Weapons_of_Math_Destruction

[4] https://safiyaunoble.com/

[5] Noble, S. U. (2018). Algorithms of oppression: How search engines reinforce racism. New York: New York University Press.

[6] https://en.wikipedia.org/wiki/Algorithms_of_Oppression

[7] https://en.wikipedia.org/wiki/Virginia_Eubanks

[8] Eubanks, V. (2018). Automating inequality: How high-tech tools profile, police, and punish the poor (1st ed.). New York, NY: St. Martin's Press.

[9] https://www.codedbias.com/

[10] https://en.wikipedia.org/wiki/Coded_Bias

[11] https://www.ajl.org/